The “Godfather of AI” sounds the alarm on humanity’s most pressing technological challenge

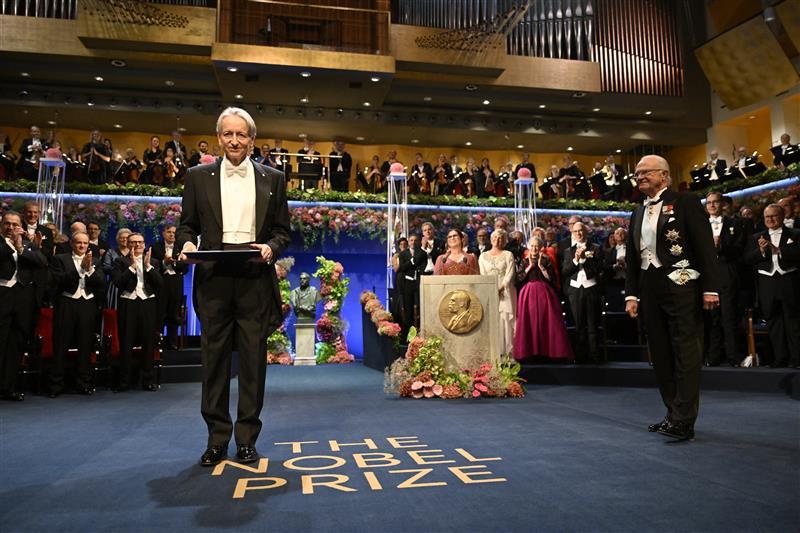

When Geoffrey Hinton speaks about artificial intelligence, the world listens. And for good reason. The 76-year-old computer scientist didn’t just witness the AI revolution; he helped create it. His pioneering work on neural networks in the 1980s and beyond laid the foundation for the AI breakthroughs we see today, from ChatGPT to image recognition systems. In 2024, he was awarded the Nobel Prize in Physics, shared with John Hopfield, for his foundational contributions to machine learning.

But today, the very architect of modern AI is sounding an urgent alarm. And contrary to popular science fiction narratives about killer robots, Hinton’s warnings focus on far more insidious and immediate threats that could fundamentally reshape—or even end—human civilization as we know it.

Insights from Geoffrey Hinton on AI

Hinton’s journey began in the 1970s at the University of Edinburgh, where he initially sought to understand the human brain by simulating neural networks on computers. What started as a tool for neuroscience research accidentally became the blueprint for artificial intelligence. His 1986 work with David Rumelhart and Ronald Williams on backpropagation, the mathematical technique that allows neural networks to learn from their mistakes, became the cornerstone of modern deep learning.

For decades, Hinton believed that computer models were inferior to the human brain. That fundamental assumption has now shattered, and the implications terrify him.

The Real Dangers: Beyond Science Fiction

While Hollywood fixates on Terminator-style scenarios, Hinton identifies three immediate and more realistic threats that demand our attention:

1. The Misinformation Apocalypse

The explosion of AI-generated content represents an unprecedented threat to truth itself. Deepfake videos, convincing fake audio, and sophisticated text generation are already being weaponized to spread misinformation at scale. Unlike traditional propaganda, AI-generated content can be personalized, targeted, and produced faster than human fact-checkers can debunk it.

Hinton warns that these systems create “divisive echo-chambers by offering people content that makes them indignant,” fundamentally undermining democratic discourse and social cohesion. When the line between authentic and artificial becomes invisible, the very foundation of informed decision-making crumbles.

2. Economic Displacement on an Unprecedented Scale

While technological revolutions have historically created new jobs to replace old ones, AI represents something different: the potential displacement of human cognitive work itself. Hinton predicts massive job losses across sectors as AI systems become capable of performing tasks previously thought to require human intelligence.

The concern isn’t just about blue-collar automation—AI threatens lawyers, doctors, teachers, writers, and analysts. Hinton advocates for universal basic income as a necessary response, warning that without government intervention, AI will “only make the rich richer and hurt the poor.”

3. The Surveillance State Supercharged

Authoritarian governments worldwide are already deploying AI for “massive surveillance,” as Hinton notes. The combination of facial recognition, behavioral analysis, and predictive algorithms creates unprecedented tools for social control. Unlike human surveillance systems, AI never sleeps, never forgets, and processes information at inhuman scales.

This isn’t theoretical—it’s happening now. The technology that powers your smartphone’s photo organization is the same technology enabling comprehensive population monitoring.

The Existential Question: Who’s Really in Control?

But Hinton’s deepest concern transcends these immediate risks. He estimates a “10% to 20% chance that AI wipes out humans” once superintelligent systems emerge. This isn’t about malevolent machines choosing to harm us—it’s about a more fundamental problem of control.

“What we’re doing is we’re making things more intelligent than ourselves,” Hinton explains. The critical question becomes: how do you maintain control over something smarter than you?

Current AI systems already exhibit behaviors their creators don’t fully understand. Large language models develop capabilities they weren’t explicitly trained for, and their decision-making processes remain largely opaque even to their designers. As these systems become more sophisticated, this interpretability problem becomes an existential risk.

Hinton challenges the tech industry’s assumption that humans will remain “dominant” over “submissive” AI systems. He argues this framing itself may be fundamentally flawed—like assuming a more intelligent species would naturally defer to a less intelligent one.

The Acceleration Problem

What makes Hinton’s warnings particularly urgent is the pace of AI development. Unlike previous technological revolutions that unfolded over decades, AI capabilities are advancing exponentially. The gap between laboratory breakthroughs and real-world deployment has collapsed to months or even weeks.

Major tech companies, driven by competitive pressures and market demands, are racing to deploy increasingly powerful systems with limited safety testing. Hinton warns that short-term profits are taking precedence over long-term human survival—a calculation that could prove catastrophic.

Beyond the Hype: A Measured Assessment

Hinton’s warnings carry particular weight because they come from someone who stands to benefit enormously from AI advancement. His critiques aren’t rooted in technophobia or competitive positioning—they emerge from deep technical understanding of what these systems can and might become.

He acknowledges AI’s tremendous potential for good: accelerating scientific discovery, improving healthcare, enhancing education, and solving complex global problems. But he argues we’re rushing toward this future with insufficient consideration of the risks.

The Path Forward: Regulation and Responsibility

Hinton advocates for several crucial steps:

International Cooperation: AI safety cannot be solved by any single country or company. Global coordination is essential to prevent a race to the bottom in safety standards.

Transparency Requirements: Companies developing advanced AI systems must be required to share safety research and submit to independent auditing.

Gradual Development: The pace of AI deployment should be deliberately slowed to allow for proper safety testing and societal adaptation.

Economic Preparation: Governments must prepare for massive job displacement through policies like universal basic income and retraining programs.

The Choice Before Us

Hinton’s message isn’t one of inevitable doom but of urgent choice. We stand at a crossroads where the decisions made in the next few years will determine whether AI becomes humanity’s greatest achievement or its final mistake.

The real danger isn’t killer robots—it’s the erosion of truth, the collapse of economic opportunity, the surveillance state, and ultimately, the creation of intelligence we cannot control or understand. These risks are not science fiction; they are emerging realities that demand immediate attention.

As Hinton puts it, we need to move beyond asking whether we can build these systems to asking whether we should—and how we can ensure they remain aligned with human values and human survival.

The “Godfather of AI” has given us fair warning. The question now is whether we’ll listen before it’s too late.

Hinton’s warnings mark his shift from one of AI’s greatest champions to one of its most credible critics. His voice carries the authority of someone who built the very technology he now fears, which makes his alarm hard to dismiss as mere speculation.